Accelerating SD Turbo and SDXL Turbo Inference with ONNX Runtime and Olive

With ONNX Runtime and Olive, users can easily accelerate SD Turbo and SDXL Turbo models to generate viable images in as little as one step!

Learn how ONNX Runtime can speed up LLaMA-2 inference by up to 4.5X.

Everything you need to know about running PyTorch models on the edge with ONNX Runtime.

Learn more about how ONNX Runtime helps users accelerate open source machine learning models from Hugging Face.

October 4th, 2023

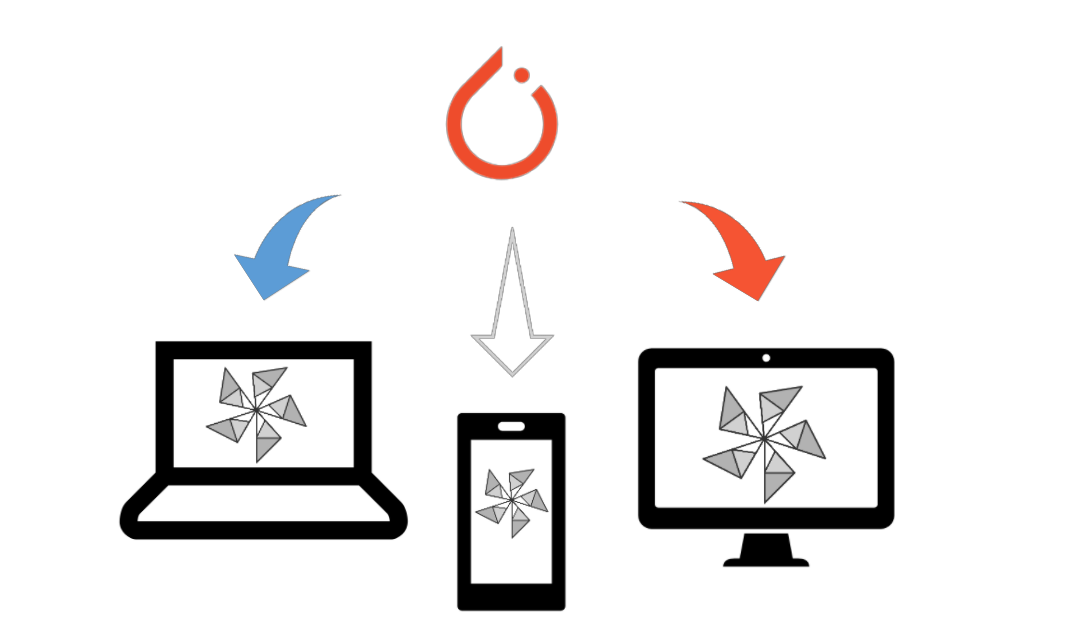

This blog presents the technical details of On-Device Training with ONNX Runtime. It explains how On-Device Training works and describes the steps and artifacts involved in the training process. This information will help you train your models on edge devices.

July 5th, 2023

Learn how ONNX Runtime accelerates Whisper and makes it easy to deploy on desktop, mobile, in the cloud, and even in the browser.

June 7th, 2023

This blog introduces On-Device Training, which enables training models on edge devices with the data available on the edge. It extends ORT Inference on the edge to include federated learning and personalization scenarios.

May 31st, 2023

This blog reviews the new capabilities of ONNX Runtime and the Olive toolchain to support hybrid inferencing, NPU EPs, and hardware-aware model optimizations on Windows and other platforms.

May 23rd, 2023

This blog reviews AI in Windows 11, including ONNX Runtime as the gateway to Windows AI and new ONNX Runtime capabilities on Windows.

May 23rd, 2023

This blog shows how to use Olive to optimize models for the DML EP in ONNX Runtime.

May 23rd, 2023

This blog shows how to use Olive to optimize the Stable Diffusion model and run it on the DML EP.

May 23rd, 2023

This blog shows how to accelerate the Stable Diffusion models from Hugging Face on NVIDIA and AMD GPUs with ONNX Runtime. It includes benchmark results obtained on A100, RTX3060, and MI250X.

May 10th, 2023

ACPT provides a ready-to-use distributed training environment for users to run on the latest multi-node GPU infrastructure offered in Azure. With Nebula, a new fast checkpointing capability in ACPT, you can save your checkpoints 1000 times faster with a simple API that works asynchronously with your training process.

March 22nd, 2023

Enabling scenarios through the usage of Deep Neural Network (DNN) models is critical to our AI strategy at Oracle, and our Cloud AI Services team has built a solution to serve DNN models for customers in the healthcare sector. In this blog post, we’ll share challenges our team faced, and how ONNX Runtime solves these as the backbone of success for high-performance inferencing.

March 15th, 2023

In this tutorial we will learn how to do inferencing for the popular Stable Diffusion deep learning model in C#. Stable Diffusion models take a text prompt and create an image that represents the text.

March 9th, 2023

VSR in Microsoft Edge builds on top of ONNX Runtime and DirectML making our solution portable across GPU vendors and allowing VSR to be available to more users. Additional graphics cards which support these technologies and have sufficient computing power will receive support in the future. The ONNX Runtime and DirectML teams have fine-tuned their technology over many years, resulting in VSR making the most of the performance and capabilities of your graphics card’s processing power.

March 8th, 2023

Over the past year, OctoML engineers worked closely with Watch For to design and implement the TVM Execution Provider (EP) for ONNX Runtime - bringing the model optimization potential of Apache TVM to all ONNX Runtime users. This builds upon the collaboration we began in 2021, to bring the benefits of TVM’s code generation and flexible quantization support to production scale at Microsoft.

March 2nd, 2023

On-device machine learning model serving is a difficult task, especially given the limited bandwidth of early-stage startups. This guest post from the team at Pieces shares the problems and solutions evaluated for their on-device model serving stack and how ONNX Runtime serves as their backbone of success.

February 8th, 2023

In this blog, we will discuss one of the ways to make huge models like BERT smaller and faster with OpenVINO™ Neural Networks Compression Framework (NNCF) and ONNX Runtime with OpenVINO™ Execution Provider through Azure Machine Learning.

January 25th, 2023

Hugging Face’s Optimum library, through its integration with ONNX Runtime for training, provides an open solution to improve training times by 35% or more for many popular Hugging Face models. We present details of both Hugging Face Optimum and the ONNX Runtime Training ecosystem, with performance numbers highlighting the benefits of using the Optimum library.

January 24th, 2023

Choosing which machine learning model to use, sharing a model with a colleague, and quickly trying out a model are all reasons why you may find yourself wanting to quickly run inference on a model. You can configure your environment and download Jupyter notebooks, but it would be nicer if there were a way to run a model with even less effort...

June 6, 2022

Transformer-based models have revolutionized the natural language processing (NLP) domain. Ever since its inception, transformer architecture has been integrated into models like Bidirectional Encoder Representations from Transformers (BERT) and Generative Pre-trained Transformer (GPT) for performing tasks such as text generation or summarization and question and answering to name a few...

May 2, 2022

Scale, performance, and efficient deployment of state-of-the-art Deep Learning models are ubiquitous challenges as applied machine learning grows across the industry. We’re happy to see that the ONNX Runtime Machine Learning model inferencing solution we’ve built and use in high-volume Microsoft products and services also resonates with our open source community, enabling new capabilities that drive content relevance and productivity...

April 19, 2022

ONNX Runtime now supports building mobile applications in C# with Xamarin. Support for Android and iOS is included in the ONNX Runtime release 1.10 NuGet package. This enables C# developers to build AI applications for Android and iOS to execute ONNX models on mobile devices with ONNX Runtime...

December 14, 2021

We are introducing ONNX Runtime Web (ORT Web), a new feature in ONNX Runtime to enable JavaScript developers to run and deploy machine learning models in browsers. It also helps enable new classes of on-device computation. ORT Web will be replacing the soon-to-be-deprecated onnx.js...

September 2, 2021

ONNX Runtime (ORT) for PyTorch accelerates training large-scale models across multiple GPUs with up to a 37% increase in training throughput over PyTorch and up to an 86% speedup when combined with DeepSpeed...

July 13, 2021

With a simple change to your PyTorch training script, you can now speed up training large language models with torch_ort.ORTModule, running on the target hardware of your choice. Training deep learning models requires ever-increasing compute and memory resources. Today we release torch_ort.ORTModule, to accelerate distributed training of PyTorch models, reducing the time and resources needed for training...

July 13, 2021

ONNX Runtime is an open-source project that is designed to accelerate machine learning across a wide range of frameworks, operating systems, and hardware platforms. Today, we are excited to announce a preview version of ONNX Runtime in release 1.8.1 featuring support for AMD Instinct™ GPUs facilitated by the AMD ROCm™ open software platform...

July 13, 2021

Large-scale transformer models, such as GPT-2 and GPT-3, are among the most useful self-supervised transformer language models for natural language processing tasks such as language translation, question answering, passage summarization, text generation, and so on...

June 30, 2021